How to Convert SEO to AI SEO: 5 Pillars Strategy

I remember the day after Google I/O 2025. My old playbook felt like tuning a radio. Now, working with reasoning models and persistent context, content planning feels like talking with a smart friend who already knows a little about me.

My goal here is simple: I’ll show you exactly how I convert SEO to AI SEO using a five-pillar strategy, so your brand maintains visibility even as clicks change. This is practical work — intent architecture, strong snippets, and agentic workflows that help search agents and users alike.

I’ll explain the big shift in plain words. Search now stitches passages and summaries, not just links. That changes how I write content, measure outcomes, and track citations in new AI surfaces.

Disclosure: BBWebTools.com is a free online platform that provides valuable content and comparison services. To keep this resource free, we may earn advertising compensation or affiliate marketing commissions from the partners featured in this blog.

🎯 Key Takeaways

- I redesigned content for passage-level retrieval and instant answers.

- Five pillars cover intent, structure, evidence, workflow, and measurement.

- Rankings matter, but citations and visit quality now guide decisions.

- Tools like structured data and GEO (generative engine optimization) tactics speed results without compromising trust.

- I’ll share real mistakes and play‑by‑play tips you can use right away.

📘 Why I Realized Classic SEO Wasn’t Enough after Google I/O

Standing inside the I/O keynote, I felt the ground shift under how search works. The demo wasn’t about links — it was about answers stitched from many sources. That moment rewired how I think about content and the user experience.

The moment it clicked: from blue links to answers

Google showed AI Mode building a reply from passages across pages. Fan‑out queries expanded one question into many. Reasoning chains weighed evidence. Personalization tuned results by embeddings.

My early mistake: optimizing pages while Google retrieves passages

I admit I kept polishing full pages for rankings while the engine pulled single passages as summaries. My lead paragraphs were long intros, not mini‑answers.

Impressions rose and clicks fell in my data. That drop wasn’t failure; it meant users were getting answers without a click. Marketing teams are already moving: roughly half use AI tools for SEO tasks, and many content teams follow.

- Lesson: design content so it reads well as a short, extractable answer.

- Quick win: make lead paragraphs mini‑answers for agents and users.

🔍 The Big Shift: From Search Engines to Reasoning Engines

I began treating queries like a constellation of smaller questions that need stitching. That image helps me explain what modern systems actually do.

Fan-out queries, memory, and personalization in plain English

When I ask a question, the system quietly spawns many follow-ups. It pulls short passages across pages, then combines them into one answer. Think of it as a machine that drafts a summary from many notes.

Memory matters: past searches and clicks build a soft profile. Results start to feel like advice from someone who remembers you.

Personalization flips how I plan content. Two users can see different sources for the same query, so I design pieces for intent patterns rather than a single keyword.

Zero-click reality: why being cited can beat being clicked

Zero-click does not mean zero value. A brand quoted inside an AI overview earns visibility and trust. Those citations often raise the quality of later visits.

| Factor | What it does | Why it matters | Action I take |
| --- | --- | --- | --- |
| Fan-out queries | Generates many synthetic queries | Increases chance of passage selection | Write clear, extractable paragraphs |
| Persistent memory | Builds user profile over time | Personalizes sources cited | Target patterns of intent, not single terms |
| Passage scoring | Ranks snippets from many documents | Decides who gets quoted | Optimize for short, evidence-rich passages |

📈 Trend Signals You Can’t Ignore Right Now

Right now, a few signals are reshaping what I track and why. Impressions are up 49% year over year, yet click-through rates drop as more answers appear on the page.

Impressions up, CTR down: what that means for visibility and performance

More people see results, but many get answers without clicking. I watch citations and on-page engagement alongside CTR.

What I track: impressions, AI citations, on-page time, and conversion rate. That mix shows true performance, not just raw traffic.
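To make that mix concrete, here is a minimal sketch of how I might compute the ratios side by side. The `PageStats` fields and every number below are made up for illustration; they are assumptions, not an export format from any real analytics tool.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Hypothetical per-page counts; field names are illustrative only."""
    impressions: int
    clicks: int
    ai_citations: int
    conversions: int


def ctr(stats: PageStats) -> float:
    """Click-through rate: clicks divided by impressions."""
    return stats.clicks / stats.impressions if stats.impressions else 0.0


def conversion_rate(stats: PageStats) -> float:
    """Conversions divided by clicks (visits), not by impressions."""
    return stats.conversions / stats.clicks if stats.clicks else 0.0


# Made-up numbers: impressions rise, clicks fall, conversions hold steady.
last_year = PageStats(impressions=10_000, clicks=400, ai_citations=2, conversions=20)
this_year = PageStats(impressions=14_900, clicks=350, ai_citations=9, conversions=21)

for label, s in (("last year", last_year), ("this year", this_year)):
    print(f"{label}: CTR={ctr(s):.2%}  conv={conversion_rate(s):.2%}  citations={s.ai_citations}")
```

In this invented example CTR falls while the conversion rate and citation count rise, which is the pattern I would read as "fewer but better visits" rather than a loss.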

Conversational queries and intent patterns shaping rankings

Search queries are turning into multi-turn dialogs. I write pages that answer obvious follow-ups, such as “which one integrates with WordPress?” right in the paragraph.

That change means passage-level relevance and intent patterns now influence visibility more than single keyword density.

| Signal | What I measure | Action I take |
| --- | --- | --- |
| Impressions ↑ | Visibility across features | Optimize short, extractable passages |
| CTR ↓ | Quality of visits | Track citations and conversions |
| Conversational search | Follow-up intent patterns | Write Q&A micro‑sections |

🧠 What Convert SEO to AI SEO Actually Means

Search no longer rewards long billboards; it favors short, verifiable passages that answer a clear need.

I think of the shift like moving from a billboard to an index card. A small card with three clear lines is easier for an agent to pick and cite than a 2,000-word essay.

From keywords to user intent vectors and passage-level relevance

Instead of chasing single phrases, I map the user’s journey and write passages for each need.

These intent vectors are simple: identify a user question, offer a mini-answer, then add one quick fact that proves it.

Designing content that agents can reason over, not just rank

I structure pages with a lead mini-answer, a concise summary, and well-labeled sections that can be extracted cleanly.

Markup matters: schema and GEO provide engines with the necessary hooks to extract the right two or three sentences into summaries.

- Write mini-answers at the top of each section.

- Label facts and cite sources so passages are verifiable.

- Map passages to user needs across the funnel.

| Focus | What I write | Why it works |
| --- | --- | --- |
| Lead mini-answer | One clear sentence | Easy for agents to quote |
| Concise summary | 2–3 supporting sentences | Gives context for reasoning |
| Structured sections | Headings + markup | Improves extractability |

"🧠 Be quotable, verifiable, and easy to stitch into a reasoning chain."

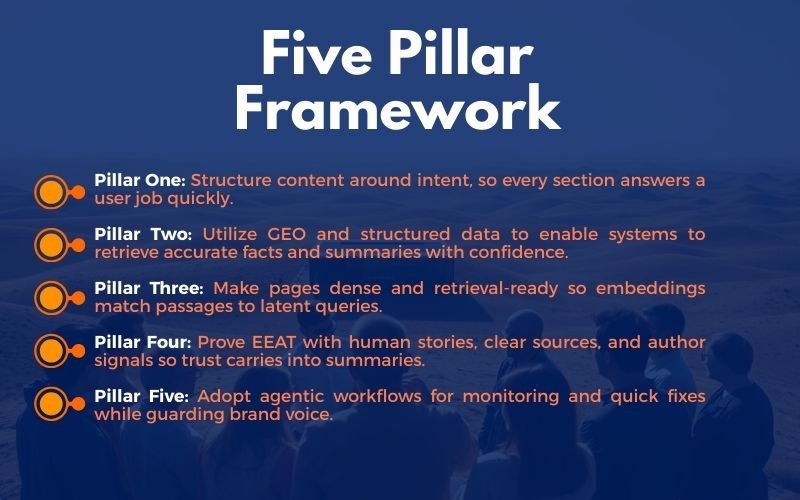

💡 The Five Pillars I Use to Convert SEO to AI SEO

When I sketched the five pillars, I wanted a simple map anyone could follow. Each pillar answers a concrete job for the user and for agents that pull short passages.

I preview what each pillar does and why it matters. The list below is practical and tied to real features I use.

- Pillar One: Structure content around intent, so every section answers a user job quickly.

- Pillar Two: Use GEO and structured data so systems can lift correct facts and summaries with confidence.

- Pillar Three: Make pages dense and retrieval-ready so embeddings match passages to latent queries.

- Pillar Four: Prove EEAT with human stories, clear sources, and author signals so trust carries into summaries.

- Pillar Five: Adopt agentic workflows for monitoring and quick fixes while guarding brand voice.

Payoff: better visibility in AI Overviews, steadier performance when clicks drop, and a stronger brand presence that survives changing search behavior.

“Make passages short, verifiable, and easy for agents to stitch into answers.”

Pillar One: Intent-Centric Content Architecture

I started mapping content like a road trip, with stops that answer the next obvious question. Each stage in the funnel gets a short passage that meets immediate needs and invites the next step.

Mapping user journeys to latent queries across the funnel

I map awareness, consideration, and decision as clear waypoints. At each stop I write a 1–2 sentence mini-answer that addresses the latent query for that stage.

Lead paragraphs as mini-answers for AI summaries

Quick tip: front-load a crisp 2–3 sentence answer at the top. I used to bury the point in paragraph four and lost citations. Short leads are extractable and boost citation likelihood.
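A quick way to catch buried leads is to count sentences in the first paragraph before publishing. The sketch below is a rough check, not a real linter: the function name `lead_paragraph_report` is hypothetical, the sentence split is naive (it breaks on abbreviations like "e.g."), and the 2–3 sentence range is simply the target from the tip above.

```python
import re


def lead_paragraph_report(text: str, min_sentences: int = 2, max_sentences: int = 3) -> dict:
    """Count sentences and words in the first paragraph of `text`."""
    # First paragraph = everything before the first blank line.
    lead = text.strip().split("\n\n", 1)[0]
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", lead) if s]
    words = lead.split()
    return {
        "sentences": len(sentences),
        "words": len(words),
        "ok": min_sentences <= len(sentences) <= max_sentences,
    }


draft = (
    "Schema markup labels facts for machines. It helps engines quote you. "
    "Add it to FAQ pages.\n\n"
    "More detail follows here."
)
print(lead_paragraph_report(draft))
```

I would run something like this over a batch of drafts and hand-review anything flagged, since a short lead is not automatically a good answer.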

Entity and schema foundations that clarify experience

I label entities, add schema, and include precise facts so machines read who, what, when, where, and why without guessing. That structured data helps my brand and content travel into summaries cleanly.

- Treat each subhead as a context window.

- Use keywords as signposts, not the main act.

- Keep passages verifiable with a fact or source line.

Pillar Two: Generative Engine Optimization and Structured Data

My first step was making facts obvious and easy for machines to read. I treat pages like a set of small cards that an engine can lift and stitch into an answer.

Schema markup, GEO, and rich snippets for precise extraction

I apply GEO tags to flag the parts of my content most likely to answer conversational questions. This helps search features find short, factual passages quickly.

Why it helps: clear schema and GEO increase the chance a snippet will include your brand and improve overall visibility in answer boxes.

Passage design: headings, summaries, and tight context windows

I design passages with short headings and a one-line summary labeled “why it matters.” That keeps context small and extractable for engines with tight windows.

- I use schema for FAQ, HowTo, and Product so facts are unambiguous and extractable.

- I test snippet-worthy blocks: 40–80 word answers, bulleted comparisons, and crisp data points with sources.

- I maintain a living checklist so every page ships with markup, summaries, and scannable passages.
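For the FAQ case, the markup itself is small. Here is a minimal sketch that builds schema.org `FAQPage` JSON-LD from question and answer pairs. The helper name `faq_jsonld` is my own, and the sample pair is placeholder text; the `@type` and property names follow the public schema.org vocabulary.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Placeholder content for illustration.
markup = faq_jsonld([
    (
        "How does structured data help with generative engines?",
        "It signals the purpose of each passage so systems can cite it with more confidence.",
    ),
])
print(markup)
```

The output goes inside a `<script type="application/ld+json">` tag on the page. I would still validate it with a structured-data testing tool before shipping, because valid JSON is not the same as eligible markup.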

| Element | What I add | Key features | Benefit |
| --- | --- | --- | --- |
| GEO | Context tags on answer passages | features, labels | Faster extraction by answer engines |
| Schema | FAQ / HowTo / Product blocks | tools, structured data | Clear, verifiable snippets for search |
| Passages | Short headings + “why it matters” | content, concise facts | Higher citation likelihood and visibility |

"💡 Make each passage small, clear, and verifiable so it can be quoted."

These steps give quick wins: better feature inclusion, clearer citations, and easier page-level optimization. I keep the work light, repeatable, and measurable.

Pillar Three: Dense Retrieval Readiness

Embedding spaces turned my content work into neighborhood planning. Instead of hoping a whole page ranks, I map short passages as reachable spots in a meaning map. That change matters because modern systems find similar ideas by proximity, not exact word matches.

Why embeddings and semantic similarity beat older models

Think of embeddings as coordinates in meaning space. Passages that share a concept sit in the same “vector neighborhood,” so a single passage can answer many related queries. This is why dense retrieval often beats keyword-matching methods such as TF‑IDF and BM25 on conversational search and research tasks, where the wording of the question rarely matches the wording of the page.
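Here is a toy sketch of the "neighborhood" idea using cosine similarity. The three-number vectors are hand-made stand-ins, not output from any real embedding model (real embeddings have hundreds of dimensions), and the passage names are invented for the example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hand-made toy vectors standing in for passage embeddings (assumption).
passages = {
    "pricing": [0.9, 0.1, 0.0],
    "wordpress_integration": [0.1, 0.9, 0.2],
    "refund_policy": [0.7, 0.0, 0.6],
}

# Toy vector for a query like "does it work with my WordPress site?"
query = [0.2, 0.8, 0.1]

# Rank passages from nearest to farthest in the toy meaning space.
ranked = sorted(passages, key=lambda name: cosine(query, passages[name]), reverse=True)
for name in ranked:
    print(f"{name}: {cosine(query, passages[name]):.3f}")
```

The integration passage ranks first even though the query and passage share no exact keywords in this setup; proximity of the vectors does the matching.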

Passage-level optimization and citation likelihood

I trim each paragraph so it makes one clear point, labels the fact, and links to a source line. Short, labeled passages are easier for engines and a machine reader to extract and cite.

When a passage is quoted, I log that win, note the data and wording that worked, and reapply the pattern across pages. Those small, repeatable insights lift my content and steady my rankings over time.

Pillar Four: EEAT with Human Storytelling

I learned more from a content flub than from any case study I read. I once published a guide with solid data but no human detail. Engagement was low despite good metrics.

After that, I rewrote sections with a short anecdote and a clear source line. Time on page rose and excerpts began appearing in summaries. That change taught me the power of lived experience.

First-hand experience, author signals, and transparent sourcing

Expertise matters: I add author bios, credentials, and updated dates so readers and agents know who is speaking. Clear bylines improve trust and make claims easier to verify.

Transparent sourcing: I cite studies and link to sources under key claims. Short source lines help summaries lift facts with confidence and boost visibility.

Personal stories that anchor data and build trust

Users remember a simple story more than a spreadsheet. I use plain narratives to make statistics memorable and to show real-world application.

- I share one small failure and what I changed—real experience beats slogans.

- I include author credentials and an update date for context.

- I place concise source lines under claims so summaries can verify facts.

Pillar Five: Agentic Workflows and Automation

I set up small, watchful agents so I stop reacting days after a drop and start fixing issues in hours. This pillar blends quick monitoring with humane decision-making.

AI agents for monitoring, analysis, and real-time adjustments

I use agents that watch rankings, CTR anomalies, and emerging queries. They surface odd patterns and propose metadata or structure updates in real time.

Routine tasks like schema refreshes, title updates, and dashboards are automated, so I free my team for strategic work and better creative output.

Balancing automation with authenticity and brand voice

Agents suggest edits, but humans approve anything that touches tone or promises. I protect the voice and keep final calls in my hands.

- I set agents to alert me on drops and spikes so I respond in hours, not weeks.

- I automate repetitive chores with reliable tools and keep strategy human-led.

- I track the lifts after agent fixes to prove ROI and choose what to automate next.
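The alerting part does not need to be fancy. Below is a minimal sketch of a drop-and-spike check using a z-score over recent daily CTR. The function name `flag_anomaly`, the threshold of 3 standard deviations, and the sample numbers are all assumptions for illustration, not the logic of any particular monitoring product.

```python
from statistics import mean, stdev


def flag_anomaly(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Return True when `today` is more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # Not enough data to estimate spread.
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # Flat history: any change is unusual.
    return abs(today - mu) / sigma > threshold


# Made-up daily CTR values for one page over the past week.
daily_ctr = [0.041, 0.039, 0.040, 0.042, 0.038, 0.040, 0.041]

print(flag_anomaly(daily_ctr, today=0.025))  # sharp drop
print(flag_anomaly(daily_ctr, today=0.040))  # normal day
```

A check like this only raises the flag. Deciding whether the cause is a new AI summary, a seasonal dip, or a broken page stays with a human.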

🔨 Tools, Features, and Workflows I Rely On

When a new query trend hits, I want capabilities that turn insight into a live page in hours. My stack blends analytics, fast creation, and publishing so I can test snippets and scale what works.

From analytics to content creation: capabilities that matter

I value tools that combine traffic data, structured data support, and content editors in one place. That lets me map queries, craft extractable passages, and add schema without hopping between apps.

Notable features: integrated analytics, model choices (OpenAI, Anthropic, DeepSeek, Groq), and meta tag generation for long posts up to 5,000 words. Recently, I started experimenting with Google AI Studio as well.

Auto-posting, AI images, and bulk publishing without losing quality

I use auto‑posting to WordPress and AI images to speed publishing. Free image generations on paid plans cut costs, and bulk mode helps launch a series quickly.

Bulk drafts get human edits for top pages so voice and facts stay sharp. I rely on Amazon product blocks when I need commerce content.

Pros and cons of automation across platforms

- Pros: speed, consistency, and faster updates across platforms.

- Cons: sameness risk and brand drift if humans stop reviewing.

- I like having multiple models so I can pick the best one for summaries, images, or long form content.

| Capability | Why I use it | Outcome |
| --- | --- | --- |
| Integrated analytics | Quickly spot query shifts | Faster content pivots |
| Auto-posting | Reduces publishing friction | Higher output with fewer errors |
| Bulk mode + human edits | Scale drafts, preserve voice | Quality at scale |

📐 Measurement in a Zero-Click, Personalized World

Metrics must follow where users and agents actually find answers today. With summaries reducing clicks, I simplify what I track so data ties directly to business outcomes.

From rank tracking to conversation and citation analytics

I still monitor rankings, but I pair that with citation counts in AI Overviews (AIO) as a proxy for visibility. Google reports higher-quality visits via summaries, and many AIO placements are invisible to logged-out rank tools.

What I log:

- How often my brand appears in summaries and answer boxes as a visibility proxy.

- Conversation patterns from site search and chat to spot shifting intent and content gaps.

- Query-level AIO signals when advertiser data is available; otherwise, proxy with citations.

Quality of visits over quantity: aligning metrics with outcomes

I segment visits by conversion quality. Fewer, high-value sessions beat many low-quality clicks. That reframes success in a zero-click world.

🧠 Content Formats for a Multimodal, Multiplatform Landscape

I plan content now by imagining how a single idea unfolds across four formats. AI Mode reads video, audio, transcripts, and images, so I design each asset to be extractable and clear.

Text, video, audio, and data visuals for AI Overviews

One idea, four outputs: I craft a concise article, record a brief video, capture an audio clip, and create a simple chart. Each asset has a clear label and a one-sentence summary, allowing agents to select the relevant passage.

Make media readable: I add transcripts, timestamps, and alt text so machines and users can “see” what’s inside. That extra step boosts the chance my facts are cited in answer boxes.

- I write captions with sources and a short fact line under visuals to increase trust and snippet readiness.

- I choose platforms that expose structured data so my media is not a black box for systems that pull answers.

- I keep creation lean: reuse a single script across formats and label each file for clean extraction.
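Timestamps are the piece people skip, so here is a small sketch that turns a list of timed lines into a WebVTT caption file, a common text format for video captions. The helper names and the sample cues are hypothetical; the `WEBVTT` header and the `HH:MM:SS.mmm --> HH:MM:SS.mmm` cue line follow the format's basic syntax.

```python
def to_timestamp(seconds: float) -> str:
    """Format a number of seconds as WebVTT's HH:MM:SS.mmm."""
    millis = round(seconds * 1000)
    hours, rem = divmod(millis, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[tuple[float, float, str]]) -> str:
    """Build a WebVTT document from (start_seconds, end_seconds, text) cues."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append(f"{to_timestamp(start)} --> {to_timestamp(end)}")
        lines.append(text)
        lines.append("")  # Blank line separates cues.
    return "\n".join(lines)


# Placeholder script lines for illustration.
cues = [
    (0.0, 4.0, "One idea, four outputs: article, video, audio, chart."),
    (4.0, 9.5, "Add transcripts and alt text so the media is readable."),
]
print(to_webvtt(cues))
```

Because the same script feeds the article, the video, and the captions, one source file can produce the transcript for all of them.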

Quick win: plan one idea, ship four assets, and add transcripts. That simple workflow raises cross-platform visibility while keeping work efficient and user-friendly.

🧠 Brand Strategy for the Agent Era

Brands now compete for the lines an agent will use, not just the clicks a reader might give.

I tell simple stories so agents and users can both repeat our message without distortion. I start with a one-line promise that names what we do and who we help.

Designing messages for users and the agents that interpret them

I write passages that carry my brand point of view in plain language. Short, labeled passages make it easy for engines and real people to find the same truth.

Practical checklist:

- I define my brand’s one-line promise so an agent can summarize me cleanly.

- I craft 1–2 sentence passages that show my POV and answer a user need.

- I align topics with what my audience asks in conversation, not just competitor keywords.

- I keep trust builders—bios, dates, and concise sources—consistent so brands earn citations in answer surfaces.

⛔ Common Pitfalls When Teams Try to Convert SEO to AI SEO

Teams often trip up by treating the new landscape like a minor update rather than a platform shift. I’ve made that mistake: tweaking titles and hoping for different outcomes.

Chasing keywords while ignoring intent and reasoning

Keyword-first pages feel safe but often fail. When content prioritizes keyword density over a concise answer, agents skip the page and cite other sources.

Fix: write short, labeled passages that answer a clear user intent and include one quick fact for verification.

Underestimating personalization and logged-in variability

Many tools still rely on sparse retrieval and logged-out rank tracking. I learned that testing in one browser overlooks how embeddings and profiles affect user results.

Fix: sample results across accounts, devices, and regions, and treat citation patterns as part of performance analysis.

“Better visibility comes with trade-offs: higher quality visits but more volatility and measurement gaps.”

- Pros: improved visibility and richer user value.

- Cons: more volatility, gaps in rank data, and the need for deeper analysis of patterns and data.

🎯 Roadmap: My 90-Day Play to Make the Shift

I mapped a tight 90-day plan so teams can act without overthinking every change. This plan breaks work into clear weeks and keeps the focus on simple wins: research, structure, publish, and measure.

Week-by-week focus: research, rebuild, and refine

Weeks 1–2: research intent patterns, list priority questions, and draft mini-answers for top pages.

Weeks 3–4: add schema and GEO, tighten headings, and create extractable summaries.

Weeks 5–6: publish one multimodal piece per week; add transcripts and structured captions.

Weeks 7–8: set up agent monitoring for anomalies; document wins and misses.

Weeks 9–12: scale what worked, retire what didn’t, and share insights with stakeholders to keep momentum.

Tracking early signals and doubling down on what works

Use analytics to monitor AI summary visibility and conversation patterns. Let tracking flag pages with strong performance and those that need tweaks.

| Signal | What I watch | Action |
| --- | --- | --- |
| Visibility | AI citations & impressions | Tighten passages |
| Engagement | Time on page & conversation clicks | Refine content and CTAs |
| Quality | Conversion and brand lift | Scale top formats |

📚 Conclusions

The real test now is whether a brand’s answers travel as well as its links once did. The landscape has shifted: reasoning-driven search favors short, verifiable passages, and brands that adapt first gain a lasting advantage.

I offer a one-week checklist you can act on: intent-led structure, GEO and schema, passage-first writing, clear author signals, and agentic monitoring. These five pillars make creation repeatable and measurable.

Quality beats quantity today. Tight, trustworthy passages earn more citations than long, vague posts. Run small experiments, log what works, and use light automation for routine fixes so your voice stays human.

Build for users and the agents that help them. Do that, and your visibility, rankings, and long-term advantage follow.

FAQ: Convert SEO to AI SEO

What do you mean by converting SEO to AI SEO?

I mean shifting from keyword-first tactics to an intent- and passage-focused approach that helps reasoning engines and assistants surface my content. This includes using embeddings, structured data, and content designed as concise, answerable units, so that search and AI agents can cite and summarize it reliably.

Why did classic search tactics stop working after Google I/O?

I noticed Google and other engines started returning direct answers, passages, and multimodal results instead of just blue links. That made traditional page-level keyword chasing less effective. Visibility now depends on being understandable at the passage level and matching intent signals rather than just ranking for isolated phrases.

How do reasoning engines differ from traditional search engines?

Reasoning engines interpret user intent, combine signals from multiple documents, and generate synthesized answers. They rely on memory, personalization, and semantic retrieval rather than pure keyword matching, so content must be structured for extraction and reuse by agents.

What are the immediate trend signals I should watch?

Pay attention to rising impressions with falling CTR, increased conversational queries, and passage-level citations. Those patterns signal zero-click behavior and the need to optimize for visibility in summaries and featured answers, not only for organic clicks.

How do I design content for agents to reason over?

I break content into clear lead paragraphs, concise micro-answers, and well-labeled passages. I add schema and entity signals, prioritize factual sourcing, and create semantic clusters so embeddings can match content to latent user queries.

What is intent-centric content architecture?

It’s mapping user journeys to latent queries across the funnel—creating pages and passages that answer high-intent questions, support discovery, and supply the short, factual snippets agents pull for answers. I use entity models and topical hubs to connect related content.

How does structured data help with generative engines?

Schema markup, clear headings, and geo or product details improve precise extraction. Structured data signals the purpose of each passage, enabling generative systems to cite and use my content more confidently in answers and snippets.

Why are embeddings and dense retrieval important?

Embeddings capture semantic meaning, allowing dense retrieval to find relevant passages even when the wording differs. That increases the chance a passage from my site is cited by an assistant or model, outperforming classic text-matching approaches like TF-IDF.

How do I maintain EEAT while using generative tactics?

I combine transparent author signals, first-hand experience, and clear sourcing with engaging storytelling. The human context enhances trust, making my content more likely to be selected and credited by models and agents.

Can automation and AI agents replace my editorial team?

Automation helps with monitoring, drafting, and scaling workflows, but I balance it with human review to preserve brand voice and accuracy. Agents excel at tracking signals and suggesting changes; humans validate and add narrative authority.

What tools and analytics should I rely on?

I utilize analytics platforms that provide insights into conversation and citation metrics, embedding libraries for similarity testing, CMS features for structured content, and publishing tools that enable bulk updates without compromising quality. These capabilities let me track intent, performance, and brand impact.

How do I measure success in a zero-click world?

I track citations, assistant impressions, quality of visits, downstream conversions, and engagement on answer cards—shifting from rank obsession to outcome-oriented metrics that reflect how agents surface and reuse my content.

What content formats work best for a multimodal landscape?

Mixing text with video, audio, and data visualizations enhances coverage across various agent pipelines. Short, captioned videos, structured transcripts, and clear visual captions help models understand and extract key facts across formats.

How should brand messaging change for the agent era?

I design concise, authoritative messages that serve both users and the agents that interpret them. That means consistent entity signals, a clear brand voice, and modular content pieces that agents can combine without losing context.

What common mistakes do teams make when shifting?

Teams often chase keywords while ignoring intent, skip passage-level optimization, and underestimate the importance of personalization. They also over-automate content without human oversight, which harms quality and brand trust.

What does your 90-day playbook look like?

I split it into research, rebuild, and refine. Early weeks focus on intent mapping and pilot passages. Mid-phase implements structured data and dense retrieval tests. Final weeks track signals, iterate on high-impact pages, and scale what proves effective.
